How do you perform hand-eye calibration with a robot, and why is it necessary?

Hand-eye coordination serves the single purpose of relating robot motion to camera vision

Hand-eye coordination, in the context of robot vision, is a form of calibration that serves a single, crucial purpose: to define the precise geometric relationship between the robot’s coordinate system and the camera’s coordinate system.

Unlike standard machine vision calibration, which only relates pixels to real-world units, hand-eye coordination enables the system to answer the question, “If the camera sees an object at this pixel location, where exactly must the robot move its tool to interact with it?” This is essential for robot guidance.

The Two Primary Scenarios

The procedure for hand-eye coordination varies depending on the mounting of the camera, leading to two main scenarios:

Robot Guidance

The camera is mounted above the work area in a fixed position, and the robot moves relative to it (a setup commonly called eye-to-hand). The objective is to map the camera's fixed view onto the robot's coordinates. The robot must be taught where it is in the camera's view.

Robot Pick-and-Place

The camera is mounted on the robot arm and therefore moves with the robot (a setup commonly called eye-in-hand). The objective in this case is to determine the exact offset and rotation between the camera's lens and the robot’s tool center point (TCP). The robot must know where the camera is relative to its own 'hand.'

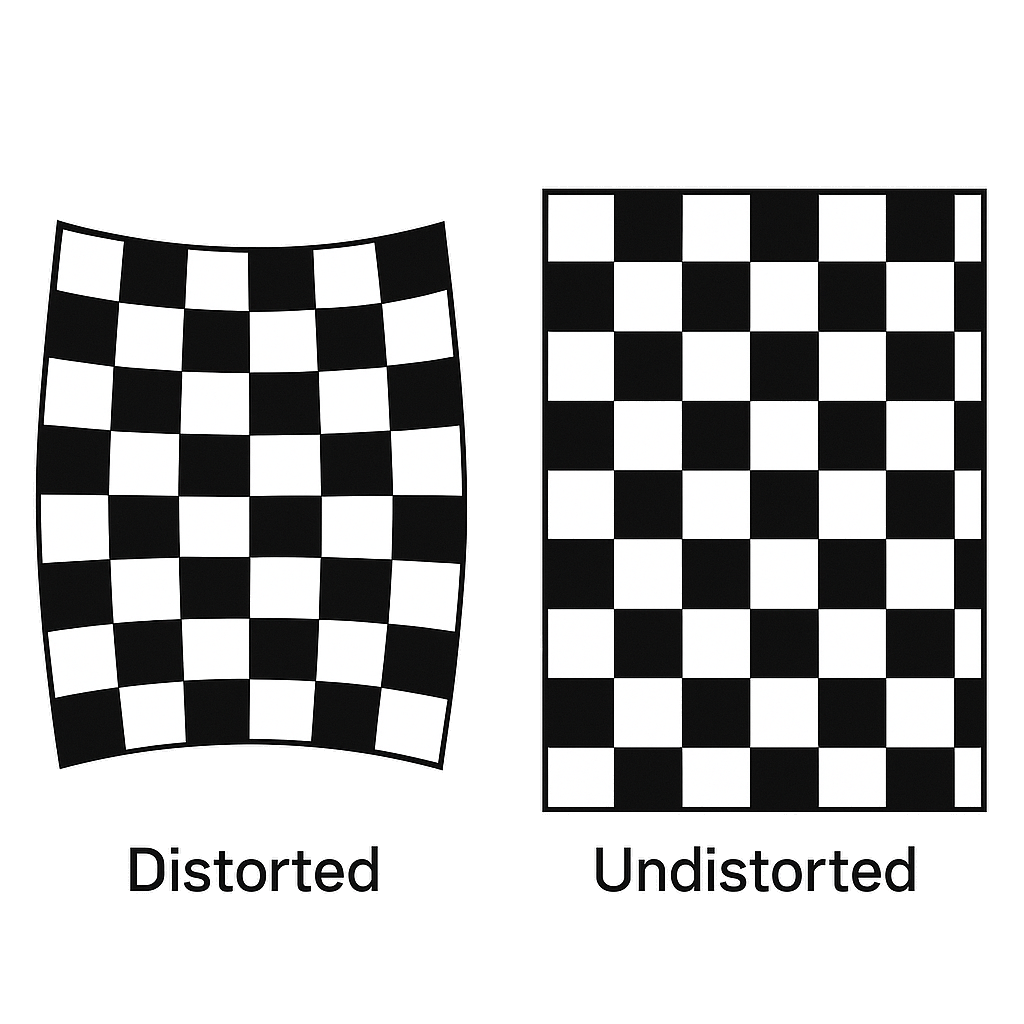
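The difference between the two setups can be sketched with homogeneous transforms. The snippet below is a minimal illustration, not a definitive implementation: the names `BASE_T_CAM`, `TCP_T_CAM`, `static_camera_to_base`, and `moving_camera_to_base` are hypothetical, the numeric values are made up, and rotations are limited to a yaw about Z to keep the example short. It uses plain Python so it runs without extra libraries; a real system would usually rely on a matrix library.

```python
import math

def pose(x, y, z, yaw_deg):
    """Build a 4x4 homogeneous transform: rotation about Z by yaw_deg plus a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]

def matmul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform_point(t, p):
    """Apply a 4x4 transform to a 3D point and return the transformed (x, y, z)."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))

# Hypothetical calibration results, in millimetres (assumed values for illustration).
BASE_T_CAM = pose(500.0, 0.0, 800.0, 90.0)  # static camera: camera pose in the robot base frame
TCP_T_CAM = pose(0.0, 50.0, 30.0, 0.0)      # moving camera: camera pose in the TCP frame

def static_camera_to_base(p_cam):
    """Static camera: one fixed matrix maps camera coordinates to robot coordinates."""
    return transform_point(BASE_T_CAM, p_cam)

def moving_camera_to_base(base_t_tcp, p_cam):
    """Moving camera: chain the robot's current TCP pose with the fixed camera-to-TCP offset."""
    return transform_point(matmul(base_t_tcp, TCP_T_CAM), p_cam)

# A point seen 10 mm / 20 mm off the camera's optical centre.
print(static_camera_to_base((10.0, 20.0, 0.0)))
# approximately (480.0, 10.0, 800.0)

current_tcp = pose(300.0, 100.0, 400.0, 90.0)  # pose reported by the robot at capture time
print(moving_camera_to_base(current_tcp, (10.0, 20.0, 0.0)))
# approximately (230.0, 110.0, 430.0)
```

In the static case the matrix never changes after calibration. In the moving case the camera-to-TCP offset is constant, but the result also depends on where the robot was when the image was taken.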

The Calibration Procedure

The coordination process works by introducing a known reference point or object that both the robot and the camera can locate, so that their measurements of the same target can be compared.

The robot is commanded to move to a series of defined positions while the camera simultaneously observes a target (often the same grid used for lens calibration). By correlating the robot's known poses at each position (reported in the robot’s coordinate system) with the pixel locations of the target as seen by the camera (the camera’s coordinate system), the system can compute the mathematical transformation between the two.
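As a rough sketch of how such a transformation can be computed, the example below fits a 2D affine map from pixel coordinates to robot X/Y coordinates by least squares. It is a simplification under stated assumptions: a static camera looking at a flat work surface, lens distortion already corrected, and the target located at the robot's tool position in each image. The function names `fit_pixel_to_robot` and `pixel_to_robot` and all data values are hypothetical; full 3D hand-eye calibration solves for a rotation and translation instead.

```python
def solve3(m, rhs):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with partial pivoting."""
    a = [row[:] + [r] for row, r in zip(m, rhs)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (are the points collinear?)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(3):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [a[i][3] / a[i][i] for i in range(3)]

def fit_pixel_to_robot(pixels, robot_xy):
    """Least-squares affine map: X = a*u + b*v + c, Y = d*u + e*v + f."""
    if len(pixels) != len(robot_xy) or len(pixels) < 3:
        raise ValueError("need at least 3 matching point pairs")
    rows = [(u, v, 1.0) for u, v in pixels]
    # Normal equations: (A^T A) * coeffs = A^T * target, solved once per robot axis.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):
        atb = [sum(r[i] * p[axis] for r, p in zip(rows, robot_xy)) for i in range(3)]
        coeffs.append(solve3(ata, atb))
    return coeffs  # [[a, b, c], [d, e, f]]

def pixel_to_robot(coeffs, u, v):
    """Convert a pixel location to robot X/Y using the fitted coefficients."""
    (a, b, c), (d, e, f) = coeffs
    return (a * u + b * v + c, d * u + e * v + f)

# Hypothetical calibration data: where the camera saw the target (pixels)
# and where the robot reported its tool at the same moment (mm).
pixels = [(100, 100), (500, 100), (500, 400), (100, 400)]
robot_xy = [(250.0, -50.0), (350.0, -50.0), (350.0, 25.0), (250.0, 25.0)]

coeffs = fit_pixel_to_robot(pixels, robot_xy)
print(pixel_to_robot(coeffs, 300, 250))  # robot X/Y for an object seen at pixel (300, 250)
```

Using more than the minimum three point pairs, spread across the whole field of view, lets the least-squares fit average out measurement noise in both the robot positions and the detected pixel locations.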

In Summary

Hand-eye coordination links the camera’s field of view directly to the robot’s physical motion.

It is critical for robot guidance applications, enabling a robot to locate and interact with objects based on visual data.

The calibration computes a transformation matrix that converts coordinates from the camera’s frame of reference to the robot’s frame of reference.

The two main setups are a static camera above the workspace and a moving camera mounted to the robot arm. Each requires its own transformation matrix.